Oh yes, and my fresh install of Ubuntu Budgie 18.04 gave the same crash message, as did the upgrade to 19.04.

Good finds! If native games are working, there may be some trick you have to apply to the specific games that crash or give weird errors. A weird example from my Windows era was trying to run Titan Quest and being greeted with a “No Virtual Memory Found!” error… with 16 GB of RAM available.

About OpenGL/Vulkan running better or worse: Vulkan doesn’t automatically make things run better. What it does for Linux users is offer a way to run DirectX games through Vulkan. So going from not working to working in any form is an infinite boost in performance! When comparing graphics APIs by themselves, it depends on how the programmers actually implemented them in the game (for example, I feel like Vulkan in Dota 2 is kinda poorly done).

There’s a small chance you have incompatibilities due to Budgie. I quickly checked and saw it’s based on GNOME. Most complaints come from KDE (Plasma) users running games, but I’d keep an eye on that.

If ACO worked before and is buggy after applying Padoka, there may be some incompatibilities with that specific Mesa version. I remember three specific cases:

- Default repository (ubuntu/linux mint) giving me very poor or unusable DXVK, but working Dota 2

- Padoka giving usable DXVK and Dota 2

- oibaf with new features and better DXVK, but breaking Dota 2

and other combinations of those. The point being: sometimes a DXVK version and a Mesa driver version don’t match up, causing some things to break. In extreme cases (oibaf being bleeding edge), it may even break native Vulkan, which is not right at all.

Yep. I’ve given up on ACO and Star Wars Battlefront for the moment. Most things I want to run are running apart from those, and quite well too. So I can’t really complain.

The only thing is, what seems to be the video driver crashing is really annoying.

This happens when a game isn’t running that well, like Doom 4 in OpenGL mode for me. For example, I got my Spellforce 3 install working again by dropping Wine back to 4.9 and DXVK back to 1.2, but it was still unstable enough to hang and take the video driver down with it.

My system is still running: sound is still going and the hard drive light is ticking away. But the keyboard doesn’t respond to anything, because the whole desktop session seems to have been taken out by the video driver crash, so I have to power it off at the switch.

I’m concerned that I’ll end up with file system errors if I have to keep powering off the PC when a game crashes the video driver.

Maybe you can try Unigine Superposition as a graphics driver tester instead of games. If games run for a while and then break, it’s useful to check the Wine logs (and enable Wine debug output, etc.).

Keep in mind that there are some incompatibilities between DXVK and Wine versions. DXVK 1.0 and 1.3 seem to have been big releases, so they require specific Wine versions (not sure which).

Just to be clear, your system only has the 3400G as CPU+GPU, right? The hangs do sound like mesa-vulkan-drivers bugs. If you already have mesa-vulkan-drivers and mesa-vulkan-drivers:i386 updated, you may need even newer (git) versions. One of my tedious processes was to use oibaf and go to https://gitlab.freedesktop.org/mesa/mesa to cross-reference oibaf’s versions with specific commits. For example, if oibaf says “mesa-vulkan-drivers 1.9999~oibaf~abc123”, I’ll go to that repository and look up the commit whose hash starts with abc123. Sometimes there would be fixes for Vega integrated graphics. In my early 2400G days, the whole computer would freeze: nothing would respond, sound would get stuck, and only a hard reset would solve it. That was caused by the kernel, probably a combination of the amdgpu and 2400G CPU drivers at the time.
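The cross-referencing step can be sketched as a tiny Python helper that turns an oibaf package version into a Mesa GitLab commit link you can open in a browser. It assumes the version string ends in "~<short commit hash>", exactly like the “1.9999~oibaf~abc123” example above; real oibaf version strings may be laid out differently, so treat the parsing as an assumption.

```python
import re

# Mesa's upstream repository, as linked in the post.
MESA_REPO = "https://gitlab.freedesktop.org/mesa/mesa"


def commit_hash_from_version(version: str) -> str:
    """Return the short commit hash after the last '~' in the version.

    ASSUMPTION: the version looks like "1.9999~oibaf~abc123"; real
    oibaf package versions may use a different layout.
    """
    tail = version.rsplit("~", 1)[-1]
    if not re.fullmatch(r"[0-9a-f]{6,40}", tail):
        raise ValueError(f"no commit hash found in {version!r}")
    return tail


def commit_url(version: str) -> str:
    """GitLab usually resolves abbreviated hashes in /-/commit/<hash> URLs."""
    return f"{MESA_REPO}/-/commit/{commit_hash_from_version(version)}"


if __name__ == "__main__":
    print(commit_url("1.9999~oibaf~abc123"))
```

From the commit page you can then browse the neighbouring history for Vega/Raven fixes.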

Ultimately (ugh), try Manjaro or Fedora. There’s a small chance their way of packaging stuff helps you set everything up more easily.

Great suggestions as always, Dan1lo.

A quick note, before I go out, because I just got ACO working!

I was looking at the graphics settings wondering if there was something I should turn off that the radeon driver doesn’t support.

Then the penny dropped that the answer for a workaround was obvious:

Turn down the settings that use video ram!

Which is usually the textures. So I did that, and now it’s running solid. It runs nicely too, except that I have sad, low res looking textures:

Star Wars Battlefront doesn’t have a VRAM visualiser, so I’ll just have to use the force…

To stop the crashing, I noticed that VRAM usage needs to stay well below the 2GB limit, probably because the OS and desktop use some of it too.

Hmmmm:

‘Unified memory: no’?

Sounds like maybe it’s disabled the function for sharing the available RAM with the GPU? I’ll have to dig around in my UEFI for a bit.

glxinfo | egrep -i 'device|memory'

Device: AMD RAVEN (DRM 3.32.0, 5.2.3-050203-generic, LLVM 9.0.0) (0x15d8)

Video memory: 2048MB

Unified memory: no

Memory info (GL_ATI_meminfo):

VBO free memory - total: 1210 MB, largest block: 1210 MB

VBO free aux. memory - total: 3026 MB, largest block: 3026 MB

Texture free memory - total: 1210 MB, largest block: 1210 MB

Texture free aux. memory - total: 3026 MB, largest block: 3026 MB

Renderbuffer free memory - total: 1210 MB, largest block: 1210 MB

Renderbuffer free aux. memory - total: 3026 MB, largest block: 3026 MB

Memory info (GL_NVX_gpu_memory_info):

Dedicated video memory: 2048 MB

Total available memory: 5120 MB

Currently available dedicated video memory: 1210 MB

GL_AMD_performance_monitor, GL_AMD_pinned_memory,

GL_EXT_framebuffer_object, GL_EXT_framebuffer_sRGB, GL_EXT_memory_object,

GL_EXT_memory_object_fd, GL_EXT_packed_depth_stencil, GL_EXT_packed_float,

GL_NVX_gpu_memory_info, GL_NV_conditional_render, GL_NV_depth_clamp,

GL_AMD_pinned_memory, GL_AMD_query_buffer_object,

GL_EXT_gpu_shader4, GL_EXT_memory_object, GL_EXT_memory_object_fd,

GL_MESA_window_pos, GL_NVX_gpu_memory_info, GL_NV_blend_square,

GL_EXT_memory_object, GL_EXT_memory_object_fd, GL_EXT_multi_draw_arrays,

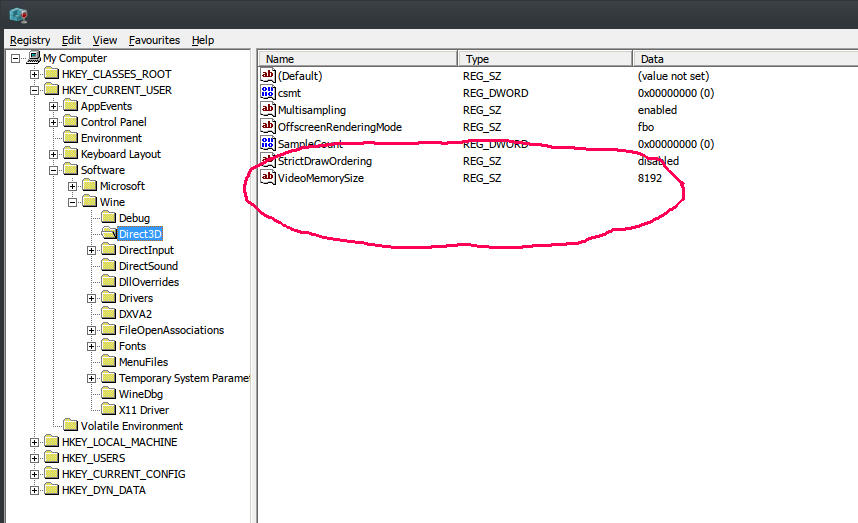
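Working from the numbers above: 2048MB of dedicated (carved-out) VRAM, of which 1210MB was still free when glxinfo ran, means about 838MB was already taken before any game started. That fits the observation that games need to stay well below 2GB. A small Python sketch that pulls those figures out of glxinfo output, assuming the GL_NVX_gpu_memory_info labels shown above (other drivers may print different ones):

```python
import re

# Labels from the GL_NVX_gpu_memory_info section of glxinfo (values in MB).
FIELDS = {
    "dedicated": r"Dedicated video memory:\s*(\d+)\s*MB",
    "total_available": r"Total available memory:\s*(\d+)\s*MB",
    "currently_available": r"Currently available dedicated video memory:\s*(\d+)\s*MB",
}

# Excerpt of the output posted above.
SAMPLE = """\
Dedicated video memory: 2048 MB
Total available memory: 5120 MB
Currently available dedicated video memory: 1210 MB
"""


def parse_nvx_memory(text):
    """Return whichever NVX memory fields appear in the text, in MB."""
    info = {}
    for name, pattern in FIELDS.items():
        match = re.search(pattern, text)
        if match:
            info[name] = int(match.group(1))
    return info


def dedicated_in_use(info):
    """Dedicated VRAM already taken when glxinfo ran, in MB."""
    return info["dedicated"] - info["currently_available"]


if __name__ == "__main__":
    info = parse_nvx_memory(SAMPLE)
    print(info)
    print("already in use:", dedicated_in_use(info), "MB")  # 2048 - 1210 = 838
```

To check your own machine, pass the live output instead of SAMPLE, e.g. parse_nvx_memory(subprocess.run(["glxinfo"], capture_output=True, text=True).stdout) after importing subprocess.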

Don’t forget you may need to set your video ram in the registry for your wine prefix. You can google that, and I think you can actually set that number higher than your actual video ram, as long as you have the system ram to back it up.

Oh cool, thanks Llewen! I’ll look into it.


Rats. Doesn’t seem to make a difference with Assassin’s Creed Odyssey.

Perhaps there are other settings like that somewhere I can make use of to try to get the ram sharing to work.

Ok, just for anyone else looking for this info, this is a screenshot of the registry where you can set your video ram. Winetricks only lets you set it to 2048, but I have a lot of video ram, so I can set it higher without issues.

I think a lot of newer games will require more than 2048MB of video ram.
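For anyone who prefers a file over clicking through regedit: the key in that screenshot is Wine’s own Direct3D setting, a string value VideoMemorySize (in MB) under HKEY_CURRENT_USER\Software\Wine\Direct3D. The Python sketch below only generates the .reg text; the file name and prefix path in the usage note are placeholders. Per the tests later in this thread, DXVK doesn’t seem to pick this value up, only wined3d does.

```python
def video_memory_reg(size_mb: int) -> str:
    """Return .reg file text setting Wine's Direct3D VideoMemorySize (MB).

    VideoMemorySize is stored as a string value, read by Wine's own
    Direct3D implementation (wined3d), not by DXVK.
    """
    if size_mb <= 0:
        raise ValueError("size_mb must be positive")
    return (
        "REGEDIT4\n"
        "\n"
        "[HKEY_CURRENT_USER\\Software\\Wine\\Direct3D]\n"
        f'"VideoMemorySize"="{size_mb}"\n'
    )


if __name__ == "__main__":
    # Redirect this into a file, e.g. videomemory.reg (placeholder name).
    print(video_memory_reg(4096), end="")
```

Then import it into the prefix with something like WINEPREFIX=/path/to/prefix wine regedit videomemory.reg (path is a placeholder).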

Yea, you can easily use 4gigs of video ram or more.

Do you have to set it to specific sizes, or can you just enter any number and it will use that as the video ram limit?

That VideoMemorySize setting is really old, and although I advise trying it, I also advise toggling it on and off if it doesn’t work as intended. Since it’s a Wine workaround and not related to DXVK, I’m not sure how the two interact. Also, I remember seeing that workaround applied to older games; I don’t know how it behaves in newer ones. (I’ve had a long journey troubleshooting audio and video for Wine games, and most old answers don’t properly solve my problems and end up generating additional ones.)

Unigine Superposition Benchmark can push VRAM usage very high because it supports 4K content, so you can test your system’s VRAM settings there (even if the benchmark itself runs at 1 fps). If that works but your games don’t, then it’s a Wine/DXVK thing.

I’m not sure tuxclocker supports showing current VRAM usage, but running some non-Wine game and checking your VRAM stats from a terminal could help debug that front. The slightly annoying thing about this tool is that you have to compile it yourself. If it’s too much trouble, ignore it and simply ‘get a huge game to run ALL MAX natively’ to test.
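For the "check your VRAM stats from a terminal" route without compiling anything: the amdgpu kernel driver exposes memory counters in sysfs (mem_info_vram_total and mem_info_vram_used, plus the GTT equivalents for system RAM the GPU can map), all in bytes. A minimal Python reader, assuming the APU is card0 (it may be card1 on some systems). Since these are kernel counters, they should read the same whether the game is native, plain Wine, or DXVK.

```python
from pathlib import Path

# Counters exposed by the amdgpu kernel driver, each in bytes.
COUNTERS = (
    "mem_info_vram_total",
    "mem_info_vram_used",
    "mem_info_gtt_total",
    "mem_info_gtt_used",
)


def read_amdgpu_memory(device_dir="/sys/class/drm/card0/device"):
    """Return the counters that exist under device_dir, converted to MiB.

    ASSUMPTION: the APU is card0; adjust device_dir if not.
    """
    base = Path(device_dir)
    result = {}
    for name in COUNTERS:
        path = base / name
        if path.is_file():
            result[name] = int(path.read_text().strip()) // (1024 * 1024)
    return result


if __name__ == "__main__":
    usage = read_amdgpu_memory()
    if not usage:
        print("no amdgpu memory counters found (wrong card number?)")
    for name, mib in usage.items():
        print(f"{name}: {mib} MiB")
```

Running it under watch -n 1 while a game loads gives a rough live view of VRAM and GTT usage.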

At this point, I think you can change the title of this thread. 3400g doesn’t seem to be the source of the problem. Maybe it happens to all APUs. ACO seems to be one of the sources of the problem, because of how its engine handles vram and stuff.

If you want a bit more information on VRAM usage and APUs, I really like this small benchmark showing that going from 256MB to 2GB doesn’t really matter, because your system will try to use as much as it can; the BIOS/UEFI setting apparently just reserves it. If you’re rocking more than 8GB of RAM and have nothing but your game running, you’ll have no trouble setting your UEFI to 256MB.

Now, if you got yourself into a working point, I suggest enjoying your games ^^ troubleshooting/benchmarking can be addicting!

Ah good, yea, Unigine Superposition works great on extreme settings, and it’s showing N/A MB for the amount of video RAM.

Running the Windows version under Lutris, it shows the available VRAM as 5120MB, but crashes when I load more than 2GB worth of graphics into VRAM.

With DXVK off, it shows available VRAM as 2048MB, and also crashes.

Running via OpenGL, however, it runs fine with DXVK on or off, with any amount of VRAM allocated.

So this looks like a VRAM limitation related to running DirectX under Wine.

So I suppose if I get a bit more information on this, I could post it to the wine developers and see if they’d be able to patch it some time?

Actually, Superposition running under DirectX with DXVK off seems to load pretty consistently. I thought it crashed one time I loaded it, but I can’t reproduce that now, so maybe it was just a one-off.

So in that case it’d have to be just a DXVK VRAM issue with DirectX.

It’s consistently crashing with DirectX and DXVK version 1.3.1, so I’ll try some different DXVK versions and see what happens.

Edit: yea, all the DXVK versions seem to have this issue, and the reported VRAM stays at 5120MB regardless of the Wine registry tweaking.

I can confirm that Superposition recognises the vram registry change when DXVK is set to disabled.

That methodology and those findings seem to be worthy of going into the DXVK github and opening an issue.

If you can run through Wine, but not DXVK, DXVK may have some limitations. Either because you’re using AMD, or because you’re using an APU or because DXVK is getting your graphics card wrong.

Remember how you’re getting reports of an ATI Radeon HD 5600 series GPU in your ACO screenshot? You’ll see in this Wikipedia table that all 5600 series cards have, at most, 2048MB of VRAM. Maybe you can change your graphics card name as seen by DXVK and it will unlock that VRAM size. I’m sorry I can’t offer the step-by-step on this last bit, but I remember reports of “gpuID spoofing” or whatever it’s called for people whose graphics cards weren’t being correctly recognized.

I wouldn’t be surprised if 3400G GPUs aren’t recognized correctly by DXVK. It took some time for my 2400G to go from ATI Radeon HD to AMD RAVEN VEGA! (again, worth pointing out in a GitHub issue and testing with your Unigine Superposition methodology)

It looks like I need to place a dxvk.conf file in my prefix to do the spoofing. I referenced it in my environment variables under the settings for the ACO Lutris prefix, but I don’t know what the codes for the different GPUs are. So I’ll look into that later.

Meanwhile, I found some interesting results when I managed to load up gallium_hud and dxvk_hud.
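To repeat this kind of HUD comparison, a small Python launcher can set both overlay variables at once. DXVK_HUD=fps,memory uses standard DXVK HUD items; the GALLIUM_HUD query name VRAM-usage is an assumption for radeonsi, so check it against the list printed when a GL program runs with GALLIUM_HUD=help. Each HUD only shows up on its own path (DXVK for Direct3D games under Wine/Proton, Gallium for OpenGL), so setting both should be harmless.

```python
import os
import subprocess


def hud_env(base=None, dxvk="fps,memory", gallium="fps;VRAM-usage"):
    """Return a copy of the environment with both HUD variables set.

    ASSUMPTION: "VRAM-usage" is the radeonsi query name on your Mesa;
    run a GL program with GALLIUM_HUD=help to list the real names.
    In GALLIUM_HUD, ';' starts a new graph and ',' adds to the same one.
    """
    env = dict(os.environ if base is None else base)
    env["DXVK_HUD"] = dxvk        # read by DXVK (Direct3D on Vulkan)
    env["GALLIUM_HUD"] = gallium  # read by Mesa Gallium drivers (OpenGL)
    return env


def run_with_hud(cmd):
    """Run a command (list of arguments) with both HUDs requested."""
    return subprocess.run(cmd, env=hud_env(), check=False)
```

For example, run_with_hud(["glxgears"]) should show the Gallium HUD on a Mesa OpenGL driver; for Lutris games the same two variables can go in the prefix’s environment settings instead.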

I noticed that DXVK_HUD is showing the use of more than 2GB vram in some of my steam proton games.

I also noticed that I can’t seem to find any examples of OpenGL games using more than 2GB VRAM via GALLIUM_HUD, even in Unigine Superposition when I max out the graphics settings.

This makes me think that perhaps the OpenGL programs are somehow being limited to less than 2GB by the driver or whatever it is that’s managing vram allocation in OpenGL apps.

So this leaves me curious as to what’s going on with DXVK, or with my hardware. Even though my system is automatically limiting itself to less than 2GB for native and regular wine games via OpenGL, DXVK games can go above that, so I suppose it must mean that my system’s able to handle more than 2GB vram usage in Linux, but for whatever reason it’s not working with DXVK in Lutris.

Possibly Valve have already found this issue in DXVK and patched it for Proton without notifying the DXVK developers, or something.

Otherwise I’m not sure what else the problem could be.

If I could get a vram monitor that works for native Vulkan games like Rise of The Tomb Raider, I’d then be able to know whether the issue is just DXVK, or if there’s some issue with Vulkan not allowing more than 2GB vram like OpenGL seems to be doing.

In that case, could that be causing DXVK to crash, because it’s trying to allocate more VRAM than Vulkan allows?

[Screenshots: DXVK_HUD and GALLIUM_HUD overlays]

If this is true, many games should be crashing, instead of simply ACO, when using DXVK.

Once again, Tuxclocker should do it for you, for your AMD card. GreenWithEnvy if you were NVidia.

Since I’ve seen this issue every once in a while in the web, it feels that a few games aren’t programmed correctly or something, so they don’t behave well with DXVK and its VRAM management. I’m proposing you spoof your graphics card name, because that can be an issue, and maybe monitor vram usage to see if it’s game-dependent or not.

Once again, I clearly remember having Path of Exile mention ATI Radeon 5000 series first, and only recently showing AMD RAVEN RIDGE VEGA or something. I recommend researching it and possibly posting a bug in the github repository, because the DXVK owner may simply not have implemented those new names and GPU IDs yet, since he mostly uses NVidia stuff, afaik.

Mmm. Yea, it does seem to be happening in multiple games. I just tried loading up Shadow of the Tomb Raider using DXVK in Lutris, and it crashes when VRAM usage goes above around 2300MB. I tried it under Steam Proton and the result is the same.

So it does seem there’s some games that can run with more than 2GB vram, and others that can’t.

I still can’t find where to get the codes for the GPU spoofing. It’s supposed to work by adding "dxgi.customDeviceId = " to dxvk.conf in the prefix folder, then adding it as an environment variable under the System options tab in the Lutris prefix configuration.

I’ll look more extensively later some time and see if I can spoof a GPU that’ll fix the problem.
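As a sketch of the file format only: DXVK’s dxgi.customVendorId and dxgi.customDeviceId options take 4-digit hex PCI IDs, and the DXVK_CONFIG_FILE environment variable points DXVK at a specific dxvk.conf. The example below plugs in 1002 (AMD’s PCI vendor ID) and 15d8 (the device ID glxinfo printed for this 3400G). Which ID you would actually spoof, and whether that lifts the ~2GB limit, is untested; the prefix path is hypothetical.

```python
from pathlib import Path


def dxvk_conf(vendor_id: int, device_id: int) -> str:
    """Return dxvk.conf lines overriding the PCI IDs DXVK reports to games.

    dxgi.customVendorId / dxgi.customDeviceId take 4-digit hex values.
    """
    for value in (vendor_id, device_id):
        if not 0 <= value <= 0xFFFF:
            raise ValueError("PCI IDs are 16-bit values")
    return (
        f"dxgi.customVendorId = {vendor_id:04x}\n"
        f"dxgi.customDeviceId = {device_id:04x}\n"
    )


if __name__ == "__main__":
    # 0x1002 = AMD's PCI vendor ID; 0x15d8 = ID glxinfo printed for the 3400G.
    # HYPOTHETICAL prefix path: replace with your actual Lutris prefix.
    conf_path = Path.home() / "Games" / "aco-prefix" / "dxvk.conf"
    print(dxvk_conf(0x1002, 0x15D8), end="")
    print(f"Lutris env var: DXVK_CONFIG_FILE={conf_path}")
```

Swapping in another card’s device ID is the actual spoofing experiment; keeping the generator separate makes it easy to try a few IDs one after another.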

A simple route would be to look for an existing issue (or open one, if it doesn’t exist) about your 3400G being labeled as Radeon HD 5600 instead of AMD Raven Ridge Vega (or whatever), and ask whether that could make your VRAM look limited.